How Twitter Rigged the Covid Debate

The platform suppressed true information from doctors and public-health experts that was at odds with U.S. government policy.

David Zweig

https://www.thefp.com/p/how-twitter-rigged-the-covid-debate?utm_source=substack&utm_medium=email

By the time reporter David Zweig got to the 10th-floor conference room at Twitter headquarters on Market Street in San Francisco, the story of the Twitter Files was already international news. Matt Taibbi, Michael Shellenberger, Leighton Woodhouse, Abigail Shrier, Lee Fang and I had revealed evidence of hidden blacklists of Twitter users; the way Twitter acted as a kind of FBI subsidiary; and how company executives rewrote the platform’s policies on the fly to accommodate political bias and pressure.

What we had yet to crack was the story of Covid.

David has spent three years reporting on Covid—specifically the underlying science, or lack thereof, behind many of our nation’s policies. For years he had noticed and criticized a bias not only in the mainstream media’s coverage of the pandemic, but also in the way it was presented on platforms like Twitter.

We couldn’t think of anyone better to tackle this story. — BW

I had always thought a primary job of the press was to be skeptical of power, especially the power of the government. But during the Covid-19 pandemic, I and so many others found that the legacy media had shown itself to largely operate as a messaging platform for our public health institutions. Those institutions operated in near-total lockstep, in part by purging internal dissidents and discrediting outside experts.

Twitter became an essential alternative. It was a place where those with public health expertise and perspectives at odds with official policy could air their views—and where curious citizens could find such information. This often included other countries’ responses to Covid that differed dramatically from our own.

But it soon seemed that Twitter was also promoting content that reinforced the establishment narrative, and suppressing views and even scientific evidence that ran to the contrary.

Was I imagining things? Was the pattern I and others witnessed proof of purposeful intent? An algorithm gone rogue? Or something else? In other words: When it came to Covid, and the information shared on a service used by hundreds of millions of people, what exactly was being amplified? And what was being banned or censored?

So when The Free Press asked if I would go to Twitter to peek behind the curtain, I took the first flight out of New York.

Here’s what I found.

The United States government pressured Twitter to elevate certain content and suppress other content about Covid-19 and the pandemic. Internal emails that I viewed at Twitter showed that both the Trump and Biden administrations directly pressed Twitter executives to moderate the platform’s content according to their wishes.

At the onset of the pandemic, the Trump administration was especially concerned about panic buying, and sought “help from the tech companies to combat misinformation,” according to emails sent by Twitter employees in the wake of meetings with the White House. One area of so-called misinformation: “runs on grocery stores.” The trouble is that it wasn’t misinformation: There actually were runs on goods.

And it wasn’t just Twitter. The meetings with the Trump White House were also attended by Google, Facebook, Microsoft and others.

When the Biden administration took over, its agenda for the American people could be summed up as: Be very afraid of Covid and do exactly what we say to stay safe.

In July 2021, then-U.S. Surgeon General Vivek Murthy released a 22-page advisory concerning what the World Health Organization referred to as an “infodemic,” and called on social media platforms to do more to shut down “misinformation.”

“We are asking them to step up,” Murthy said. “We can’t wait longer for them to take aggressive action.”

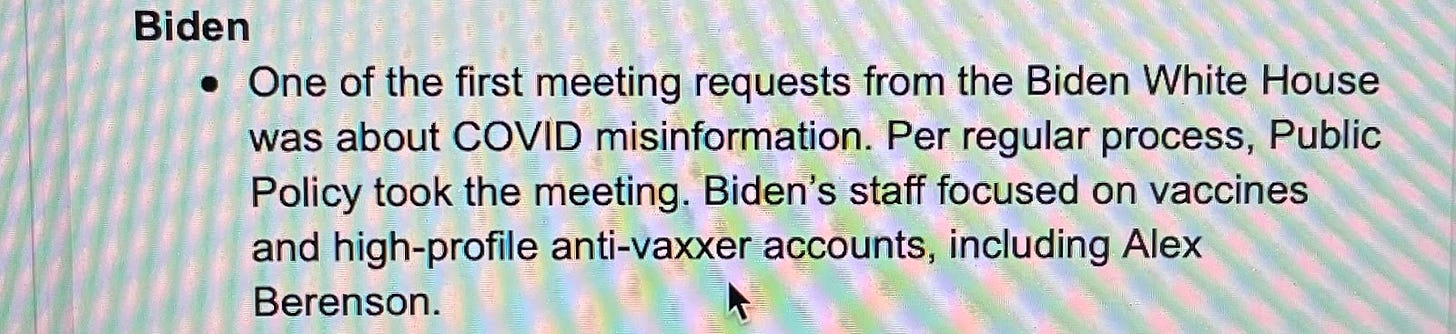

That’s the message the White House had already taken directly to Twitter executives in private channels. One of the Biden administration’s first meeting requests was about Covid, with a focus on “anti-vaxxer accounts,” according to a meeting summary by Lauren Culbertson, Twitter’s Head of U.S. Public Policy.

They were especially concerned about Alex Berenson, a journalist skeptical of lockdowns and mRNA vaccines, who had hundreds of thousands of followers on the platform.

In July 2021, the day after Murthy’s advisory, Biden said publicly that social media companies were “killing people” by allowing misinformation about vaccines. Just hours later, Twitter locked Berenson out of his account, and then permanently suspended him the next month. Berenson sued Twitter. He ultimately settled with the company, and is now back on the platform. As part of the lawsuit, Twitter was compelled to provide certain internal communications. They revealed that the White House had directly met with Twitter employees and pressured them to take action on Berenson.

Culbertson’s meeting summary, emailed to colleagues in December 2022, adds new evidence of the White House’s pressure campaign and illustrates how it tried to directly influence what content was allowed on Twitter.

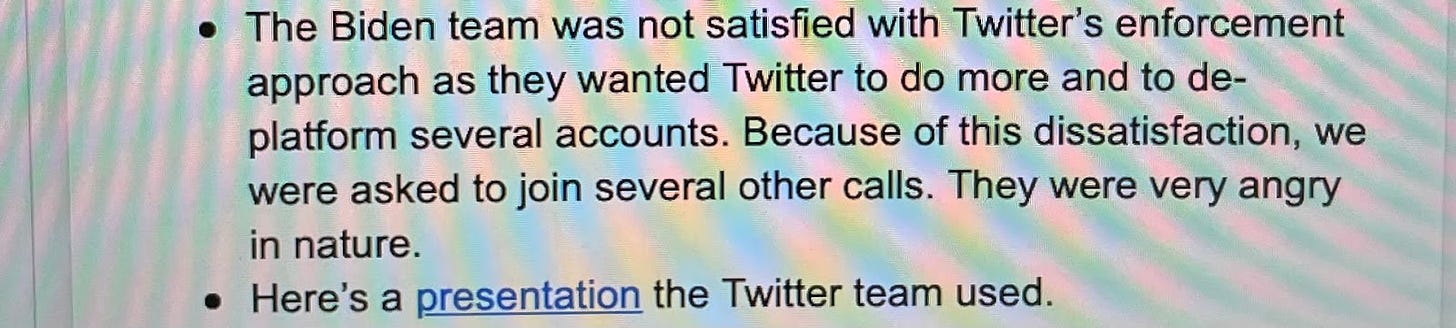

Culbertson wrote that the Biden team was “very angry” that Twitter had not been more aggressive in deplatforming multiple accounts. They wanted Twitter to do more.

Twitter executives did not fully capitulate to the Biden team’s wishes. An extensive review of internal communications at the company revealed that employees often debated moderation cases in great detail, and with more care for free speech than was shown by the government.

But Twitter did suppress views, and not just those of journalists like Berenson. Many medical and public health professionals were also targeted for expressing perspectives, or even citing findings from accredited academic journals, that conflicted with official positions. As a result, legitimate findings and questions about our Covid policies and their consequences went missing.

There were three serious problems with Twitter’s process.

First: Much of the content moderation on Covid, to say nothing of other contentious subjects, was conducted by bots powered by machine learning and AI. I spent hours discussing the systems with an engineer and with an executive who had been at the company for more than a year before Musk’s takeover. They explained the process in basic terms: Initially, the bots were fed information to train them on what to look for, but their searches would become more refined over time, both as they scanned the platform and as they were manually updated with additional chosen inputs. At least that was the premise. Though impressive in their engineering, the bots would prove too crude for such nuanced work. When you drag a digital trawler across a social media platform, you’re not just catching cheap fish; you’re going to snag dolphins along the way.

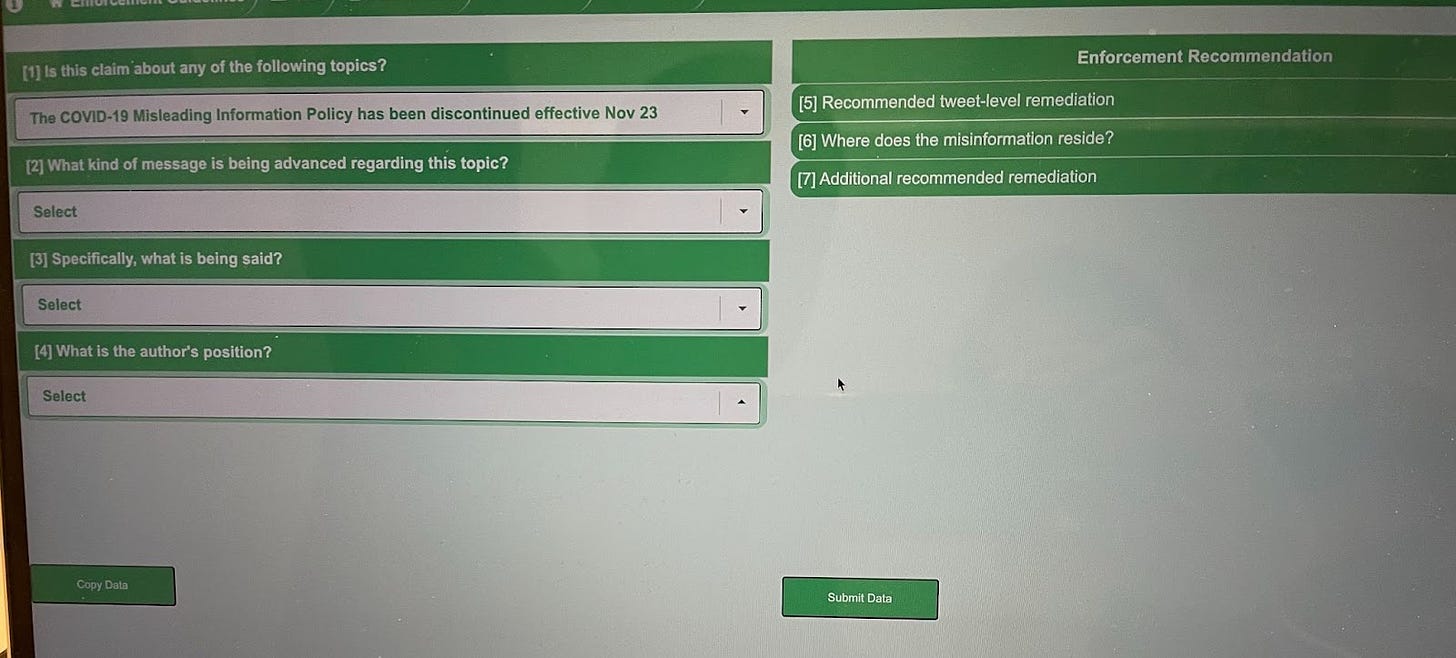

Second: Contractors operating in places like the Philippines were also moderating content. They were given decision trees to aid in their process, but tasking non-experts to adjudicate tweets on complex topics like myocarditis and mask efficacy data was bound to produce a significant error rate. The notion that remote workers, sitting in distant cube farms, were going to police medical information to this granular degree is absurd on its face.

Embedded below is an example template, deactivated after Musk’s arrival, of the decision tree tool that contractors used. The contractor would run through a series of questions, each with a drop-down menu, ultimately guiding them to a predetermined conclusion.

Third: Most importantly, the buck stopped with higher-level employees at Twitter. They chose the inputs for the bots and decision trees. They determined suspensions. And as is the case with all people and institutions, there was both individual and collective bias.

At Twitter, Covid-related bias bent heavily toward establishment dogmas. Inevitably, dissident yet legitimate content was labeled as misinformation, and the accounts of doctors and others were suspended for tweeting both opinions and demonstrably true information.

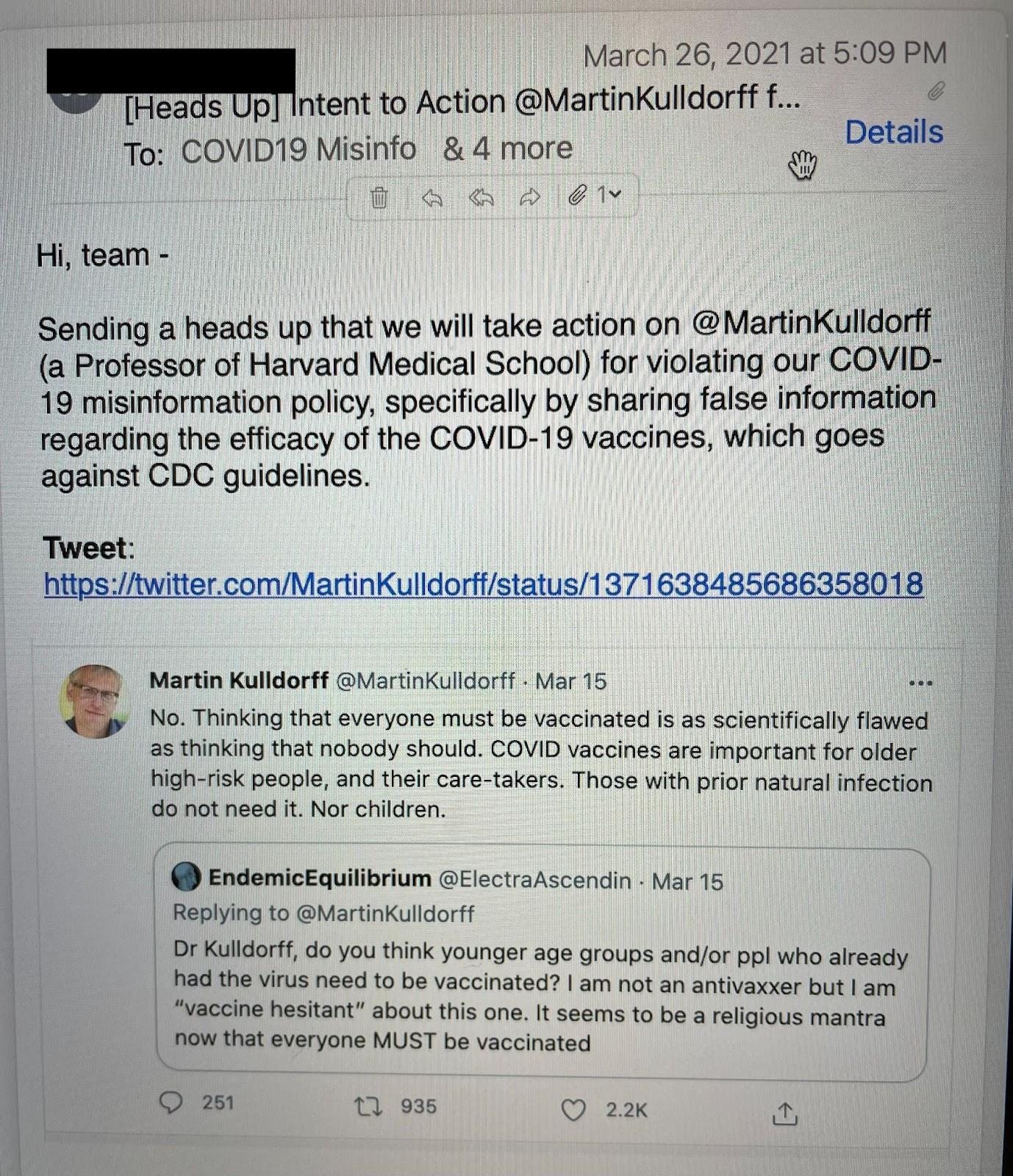

Take, for example, Martin Kulldorff, an epidemiologist at Harvard Medical School. Dr. Kulldorff often tweeted views at odds with U.S. public health authorities and the American left, the political affiliation of nearly the entire staff at Twitter.

Here is one such tweet, from March 15, 2021, regarding vaccination.

Internal emails show an “intent to action” by a Twitter moderator, saying Kulldorff’s tweet violated the company’s Covid-19 misinformation policy and claiming he shared “false information.”

But Kulldorff’s statement was an expert’s opinion—one that happened to be in line with vaccine policies in numerous other countries.

Yet it was deemed “false information” by Twitter moderators merely because it differed from CDC guidelines. After Twitter took action, Kulldorff’s tweet was slapped with a “misleading” label and all replies and likes were shut off, throttling the tweet’s ability to be seen and shared by others, a core function of the platform.

In my review of internal files, I found numerous instances of tweets about vaccines and pandemic policies labeled as “misleading” or taken down entirely, sometimes triggering account suspensions, simply because they veered from CDC guidance or differed from establishment views.

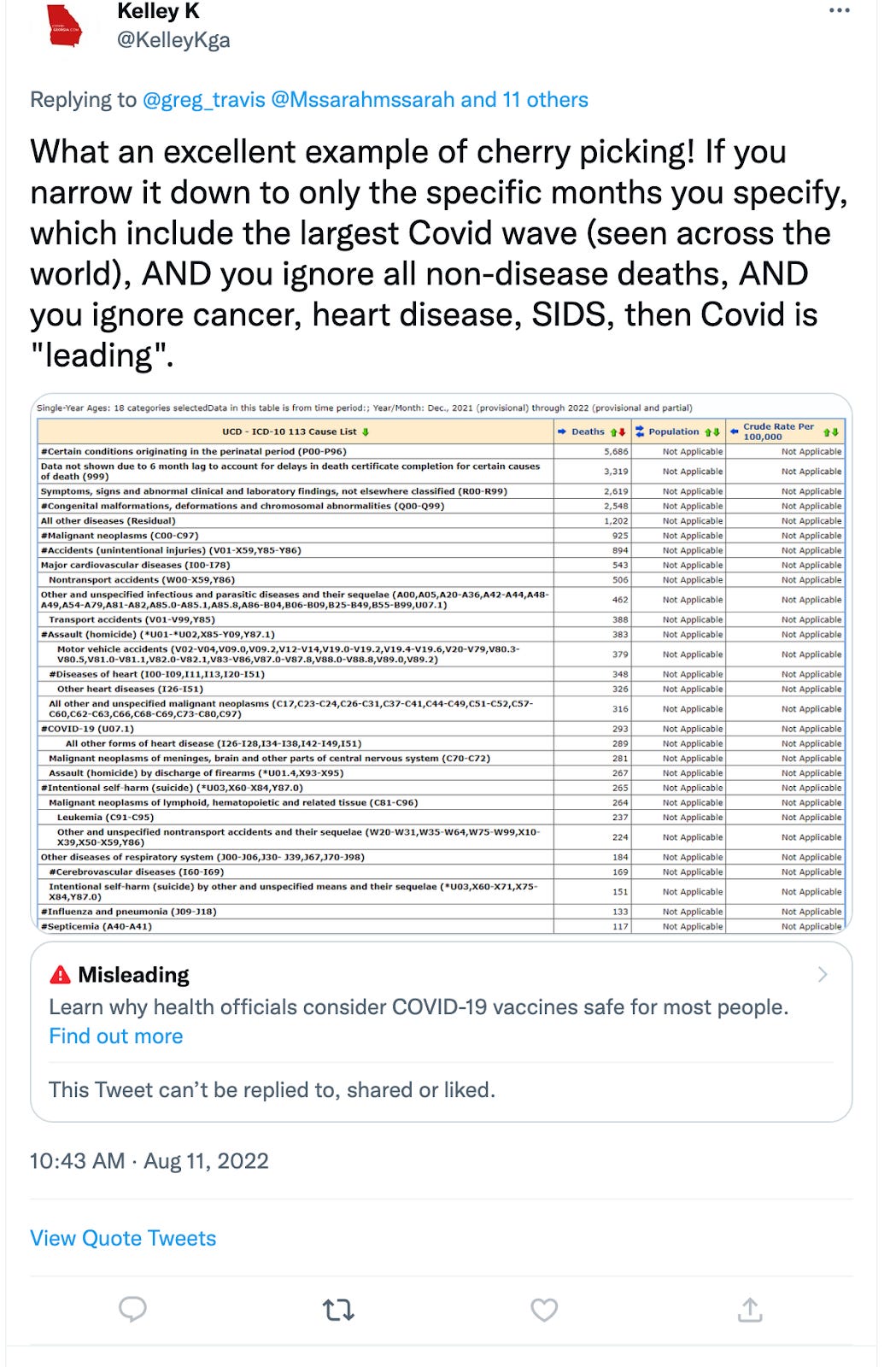

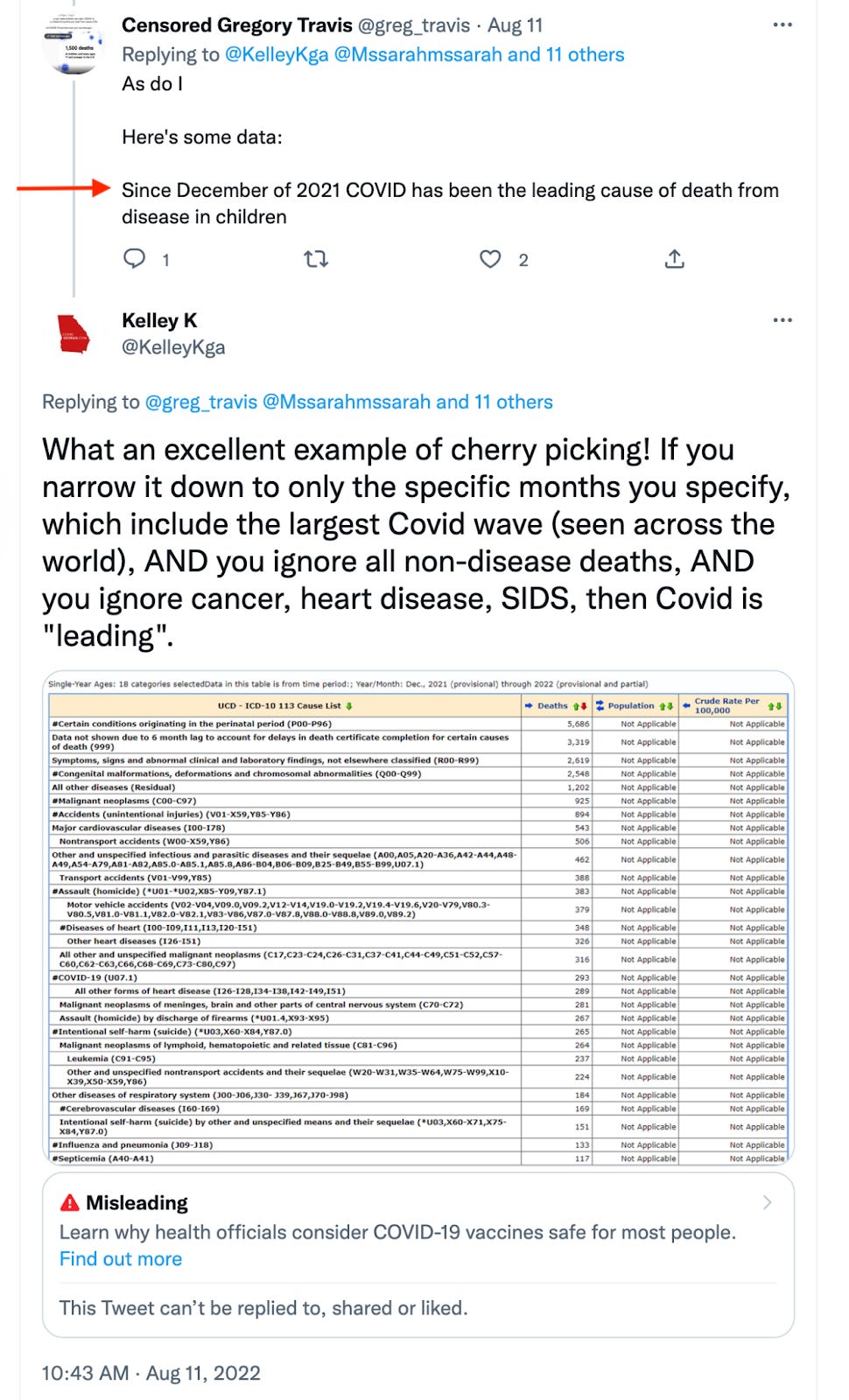

For example, a tweet by @KelleyKga, a self-proclaimed public health fact checker with more than 18,000 followers, was flagged as “misleading,” and replies and likes disabled, for showing that Covid was not the leading cause of death in children, even though it cited the CDC’s own data.

Internal records showed that a bot had flagged the tweet, and that it received many “tattles” (what the system amusingly called reports from users). That triggered a manual review by a human who—despite the tweet showing actual CDC data—nevertheless labeled it “misleading.” Tellingly, the tweet by @KelleyKga that was labeled “misleading” was a reply to a tweet that contained actual misinformation.

Covid has never been the leading cause of death from disease in children. Yet the tweet she was replying to not only remains on the platform, it carries no “misleading” label of any kind.

Whether by humans or algorithms, content that was contrarian but true, and the people who conveyed that content, were still subject to getting flagged and suppressed.

Sometimes this was done covertly. As reported earlier by The Free Press, Dr. Jay Bhattacharya, a Stanford professor of health policy who argued for focused protection of the vulnerable and an end to lockdowns, was secretly put on a Trends Blacklist.

But many instances were public facing. The author of the tweet embedded below is a physician who runs the Infectious Disease Ethics Twitter account. The tweet was labeled as “misleading” even though it was referring to the results of a peer-reviewed study that found an association between the mRNA vaccines and cardiac arrests in young people in Israel.

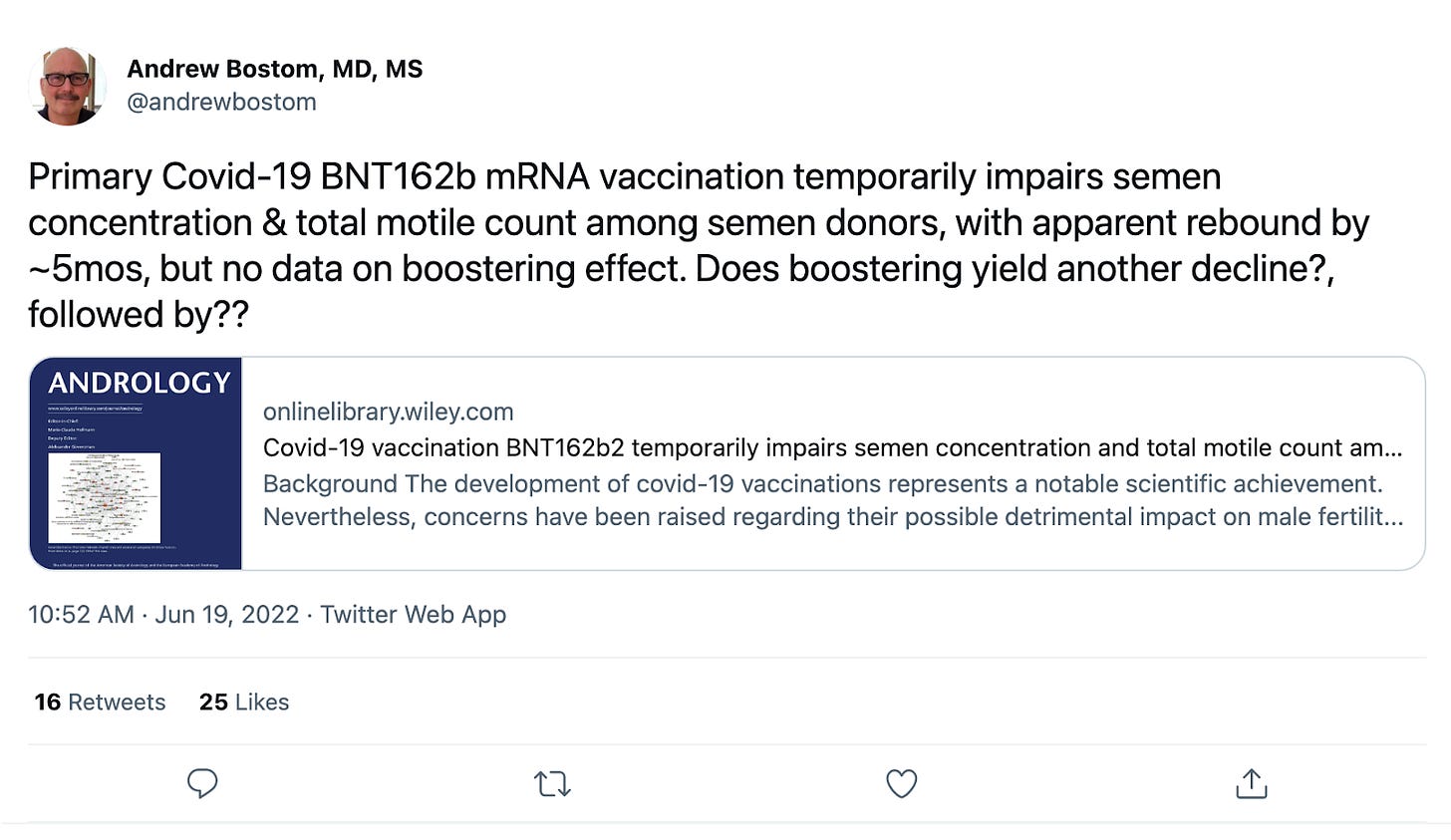

Andrew Bostom, a Rhode Island physician, was permanently suspended from Twitter after receiving multiple strikes for misinformation. One of his strikes was for a tweet referencing the results from a peer-reviewed study that found a deterioration in sperm concentration and total motile count in sperm donors following mRNA vaccination.

Twitter’s logs revealed that an internal audit, conducted by Twitter after Bostom’s attorney contacted the company, found that only one of Bostom’s five violations was valid.

The one Bostom tweet found to still be in violation of Twitter policy cited data and drew a conclusion that was totally legitimate. The problem was only that it was inconvenient to the public health establishment’s narrative about the relative risks of flu versus Covid in children.

This tweet was flagged not only by a bot but also manually, by a human being, which goes a long way toward illuminating both the algorithmic and human bias at Twitter. “It seems grossly unfair,” Bostom told me when I called to share with him my findings. “What’s the remedy? What am I supposed to do?” (His account was restored, along with a number of others, on Christmas Day.)

Another example of human bias run amok was the reaction to the tweet below by then-President Trump. Many Trump tweets led to extensive internal debates at the company, and this one was no different.

In a surreal exchange, Jim Baker, at the time Twitter’s Deputy General Counsel, asked why telling people not to be afraid wasn’t a violation of Twitter’s Covid-19 misinformation policy.

In his reply, Yoel Roth, Twitter’s former head of Trust & Safety, had to explain that optimism wasn’t misinformation.

Remember @KelleyKga with the CDC data tweet? Twitter’s response to her in an exchange about why her tweet was labeled as “misleading” is clarifying:

“We will prioritize review and labeling of content that could lead to increased exposure or transmission.”

Throughout the pandemic, Twitter repeatedly propped up the official government line that prioritizing mitigation over other concerns was the best approach to the pandemic. Information that challenged that view—for example, that pointed out the low risk children faced from the virus, or that raised questions about vaccine safety or effectiveness—was subject to moderation and suppression.

This isn’t simply the story of the power of Big Tech or of the legacy press to shape our debate—though it is most certainly that.

In the end it is equally the story of children across the country who were prevented from attending school, especially kids from underprivileged backgrounds who are now miles behind their more well-off peers in math and English. It’s the story of the people who died alone. It’s the story of the small businesses that shuttered. It’s even the story of the perpetually masked 20-year-olds in the heart of San Francisco for whom there has never been a return to normal.

If Twitter had allowed the kind of open forum for debate that it claimed to believe in, could any of this have turned out differently?
