Is Wikipedia Politically Biased?
by David Rozado

https://manhattan.institute/article/is-wikipedia-politically-biased

Executive Summary

- This work aims to determine whether there is evidence of political bias in English-language Wikipedia articles.

- Wikipedia is one of the most popular domains on the World Wide Web, with hundreds of millions of unique users per month. Wikipedia content is also routinely employed in the training of Large Language Models (LLMs).

- To study political bias in Wikipedia content, we analyze the sentiment (positive, neutral, or negative) with which a set of target terms (N=1,628) with political connotations (e.g., names of recent U.S. presidents, U.S. congressmembers, U.S. Supreme Court justices, or prime ministers of Western countries) are used in Wikipedia articles.

- We do not cherry-pick the set of terms to be included in the analysis but rather use publicly available preexisting lists of terms from Wikipedia and other sources.

- We find a mild to moderate tendency in Wikipedia articles to associate public figures ideologically aligned right-of-center with more negative sentiment than public figures ideologically aligned left-of-center.

- These prevailing associations are apparent for names of recent U.S. presidents, U.S. Supreme Court justices, U.S. senators, U.S. House of Representatives congressmembers, U.S. state governors, Western countries’ prime ministers, and prominent U.S.-based journalists and media organizations.

- This trend is common but not ubiquitous. We find no evidence of it in the sentiment with which names of U.K. MPs and U.S.-based think tanks are used in Wikipedia articles.

- We also find prevailing associations of negative emotions (e.g., anger and disgust) with right-leaning public figures, and of positive emotions (e.g., joy) with left-leaning public figures.

- These trends constitute suggestive evidence of political bias embedded in Wikipedia articles.

- We find some of the aforementioned political associations embedded in Wikipedia articles also appearing in OpenAI’s language models. This suggests that biases in Wikipedia content may be percolating into widely used AI systems.

- Wikipedia’s neutral point of view (NPOV) policy aims for articles in Wikipedia to be written in an impartial and unbiased tone. Our results suggest that Wikipedia’s NPOV policy is not achieving its stated goal of political-viewpoint neutrality in Wikipedia articles.

- This report highlights areas where Wikipedia can improve in how it presents political information. Nonetheless, we want to acknowledge Wikipedia’s significant and valuable role as a public resource. We hope this work inspires efforts to uphold and strengthen Wikipedia’s principles of neutrality and impartiality.

Introduction

Wikipedia.org is the seventh-most visited domain on the World Wide Web, amassing more than 4 billion visits per month across its extensive collection of more than 60 million articles in 329 languages.[1] Launched on January 15, 2001, by Jimmy Wales and Larry Sanger, Wikipedia has evolved over the past two decades into an indispensable information resource for millions of users worldwide. Given Wikipedia’s immense reach and influence, the accuracy and neutrality of its content are of paramount importance.

In recent years, Wikipedia’s significance has grown beyond its direct human readership, as its content is routinely employed in the training of Large Language Models (LLMs) such as ChatGPT.[2] Consequently, any biases present in Wikipedia’s content may be absorbed into the parameters of contemporary AI systems, potentially perpetuating and amplifying these biases.

Since its inception, Wikipedia has been committed to providing content that is both accurate and free from biases. This commitment is underscored by policies such as verifiability, which mandates that all information on Wikipedia be sourced from reputable, independent sources known for their factual accuracy and commitment to fact-checking.[3] Additionally, Wikipedia has a neutral point of view (NPOV) policy, which requires that articles be “written with a tone that provides an unbiased, accurate, and proportionate representation of all positions included in the article.”[4]

Many of Wikipedia’s articles cover noncontroversial topics and are supported by a wealth of objective information from several sources, so achieving NPOV is ostensibly straightforward. However, attaining NPOV is far more challenging for articles on political or value-laden topics, on which the debates are often subjective, intractable, or controversial, and sources are often challenging to verify conclusively.

The question of political bias in Wikipedia has been the subject of previous scholarly investigations. In 2012, a pioneering study using a sample of more than 20,000 English-language Wikipedia entries on U.S. political topics provided the first evidence that political articles on the site generally leaned pro-Democratic.[5] The authors compared the frequency with which Wikipedia articles mentioned terms favored by congressional Democrats (e.g., estate tax, Iraq war) versus terms favored by Republicans (e.g., death tax, war on terror) and found that the former appeared more frequently. This study was replicated by its authors in 2018, yielding similar results.[6] Other research focusing on Wikipedia’s arbitration process in editorially disputed articles has documented biases in judgments about sources and in enforcement, in a manner that shows institutional favoritism toward left-of-center viewpoints.[7]

Other research has questioned whether, and in which direction, Wikipedia’s content is biased. Some reports suggest that at least some political content in Wikipedia is biased against left-of-center politicians.[8] There is also research suggesting that Wikipedia’s content is mostly accurate and on par with the quality of articles from Encyclopaedia Britannica.[9]

Anecdotally, Larry Sanger, one of Wikipedia’s cofounders, has said publicly that he believes that the site has a high degree of political bias in favor of a left-leaning, liberal, or “establishment” perspective. Sanger has accused Wikipedia of abandoning its neutrality policy and, consequently, he regards Wikipedia as unreliable.[10]

The goal of this report is to complement the literature on Wikipedia’s political bias by using a novel methodology—computational content analysis using modern LLMs for content annotation—to assess quantitatively whether there is political bias in Wikipedia’s content. Specifically, we computationally assess the sentiment and emotional tone associated with politically charged terms—those referring to politically aligned public figures and institutions—within Wikipedia articles.

We first gather a set of target terms (N=1,628) with political connotations (e.g., names of recent U.S. presidents, U.S. congressmembers, U.S. Supreme Court justices, or prime ministers of Western countries) from external sources. We then identify all mentions of those terms in English-language Wikipedia articles.

Next, we extract the paragraphs in which those terms occur, to capture the context in which each target term is used, and feed a random sample of those text snippets to an LLM (OpenAI’s gpt-3.5-turbo), which annotates the sentiment/emotion with which the target term is used in the snippet. To our knowledge, this is the first analysis of political bias in Wikipedia content using modern LLMs for annotation of sentiment/emotion.
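The snippet-extraction and sampling steps described above can be sketched roughly as follows. This is a minimal illustration, not the report's own pipeline: the function names `extract_snippets` and `sample_snippets` are hypothetical, and the sketch assumes that paragraphs are separated by blank lines, that a mention is a case-sensitive whole-word match of the term, and that sampling is uniform without replacement. The report's actual matching and sampling rules are given in its Appendix, and the gpt-3.5-turbo annotation step is not reproduced here.

```python
import random
import re


def extract_snippets(articles, term):
    """Return every paragraph that mentions `term` (hypothetical helper).

    Assumptions: paragraphs are separated by blank lines, and a mention is a
    case-sensitive match of `term` not embedded inside a longer word.
    """
    # Lookarounds instead of \b so terms ending in punctuation (e.g. "U.S.") still match.
    pattern = re.compile(r"(?<!\w)" + re.escape(term) + r"(?!\w)")
    snippets = []
    for text in articles:
        for paragraph in re.split(r"\n\s*\n", text):
            if pattern.search(paragraph):
                snippets.append(paragraph.strip())
    return snippets


def sample_snippets(snippets, k, seed=0):
    """Draw up to k snippets uniformly at random, without replacement (assumed scheme)."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    return rng.sample(snippets, min(k, len(snippets)))


if __name__ == "__main__":
    # Toy article texts for illustration only; not Wikipedia data.
    articles = [
        "Jane Doe was elected in 2010.\n\nShe later retired.",
        "Unrelated text.\n\nJane Doe spoke at the event.",
    ]
    hits = extract_snippets(articles, "Jane Doe")
    print(hits)  # ['Jane Doe was elected in 2010.', 'Jane Doe spoke at the event.']
    print(sample_snippets(hits, 1))
```

Each sampled paragraph would then be passed, together with the target term, to the annotating model.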

In general, we find that Wikipedia articles tend to associate right-of-center public figures with somewhat more negative sentiment than left-of-center public figures; this trend can be seen in mentions of U.S. presidents, Supreme Court justices, congressmembers, state governors, leaders of Western countries, and prominent U.S.-based journalists and media organizations. We also find prevailing associations of negative emotions (e.g., anger and disgust) with right-leaning public figures and positive emotions (e.g., joy) with left-leaning public figures. In some categories of terms, such as the names of U.K. MPs and U.S.-based think tanks, we find no evidence of a difference in sentiment.

Our results suggest that Wikipedia is not living up to its stated neutral point of view (NPOV) policy. This is concerning because we find evidence of some of Wikipedia’s prevailing sentiment associations for politically aligned public figures also appearing in OpenAI’s language models, which suggests that the political bias that we identify on the site may be percolating into widely used AI systems.

Results

Before turning to the common political terms analyzed, we first validate our methodology (for specific methodological details, see Appendix) by applying it to terms that are widely accepted to have either negative or positive connotations. As Figure 1 shows, we find that terms that nearly all would agree have positive associations—such as prosperity, compassion, Martin Luther King, and Nelson Mandela—are generally used in Wikipedia articles with positive sentiment; and terms with widely accepted negative connotations—such as disease, corruption, and Joseph Goebbels—are generally used with mostly negative sentiment.

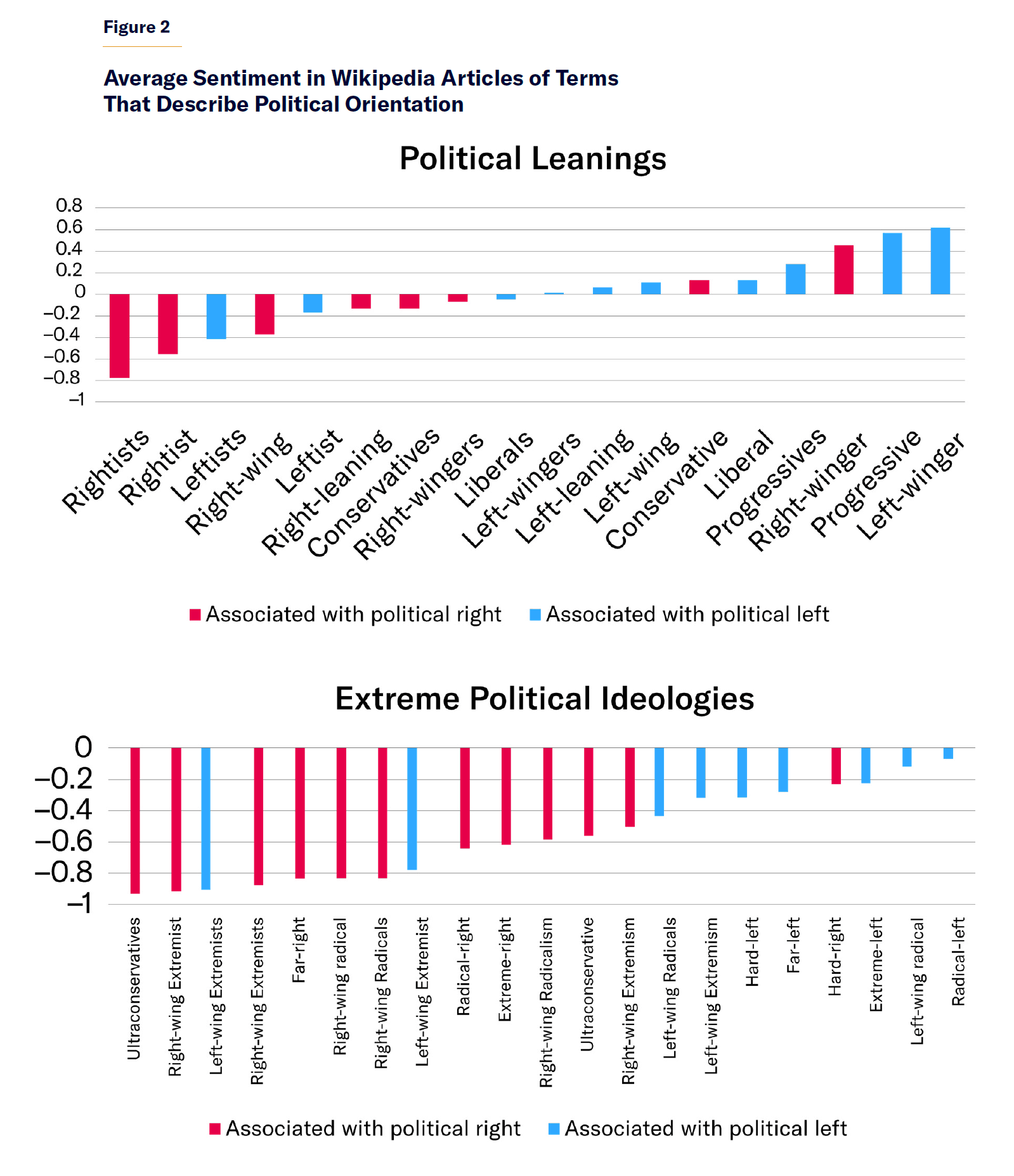

Next, we analyze how a set of terms that denote political orientation and political extremism are used in Wikipedia articles (Figure 2). The sample set of terms is small, but there is a mild tendency for terms associated with a right-leaning political orientation to be used with more negative sentiment than similar terms for a left-leaning political orientation.
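Comparing groups of terms requires turning the three-way sentiment labels into a number. The sketch below assumes one simple scheme (positive = +1, neutral = 0, negative = -1), averaged first over the annotated snippets for each term and then across the terms in a group; the scoring actually used in the report is described in its Appendix and may differ. The names `SCORE`, `term_sentiment`, and `group_sentiment`, and the toy labels in the usage lines, are hypothetical and are not data from the report.

```python
from statistics import mean

# Assumed numeric encoding of the three sentiment labels returned by the annotator.
SCORE = {"positive": 1, "neutral": 0, "negative": -1}


def term_sentiment(labels):
    """Mean score over all annotated snippets for a single term."""
    return mean(SCORE[label] for label in labels)


def group_sentiment(annotations, terms):
    """Average of per-term means, so each term counts equally regardless of snippet count."""
    return mean(term_sentiment(annotations[t]) for t in terms)


if __name__ == "__main__":
    # Hypothetical labels for illustration only.
    annotations = {
        "term_a": ["positive", "neutral", "negative", "positive"],
        "term_b": ["neutral", "neutral"],
        "term_c": ["negative", "negative", "neutral"],
    }
    left = group_sentiment(annotations, ["term_a", "term_b"])
    right = group_sentiment(annotations, ["term_c"])
    print(round(left, 3))   # 0.125
    print(round(right, 3))  # -0.667
```

Averaging per term before averaging per group is one design choice among several; pooling all snippets in a group instead would let frequently mentioned figures dominate the group score.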
